Bagging in Machine Learning Explained

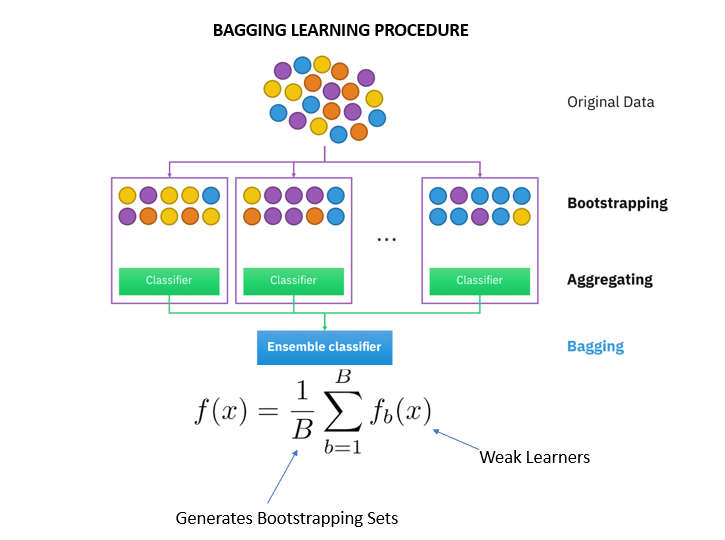

Bagging explained step by step, along with its math. Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, usually decision trees.

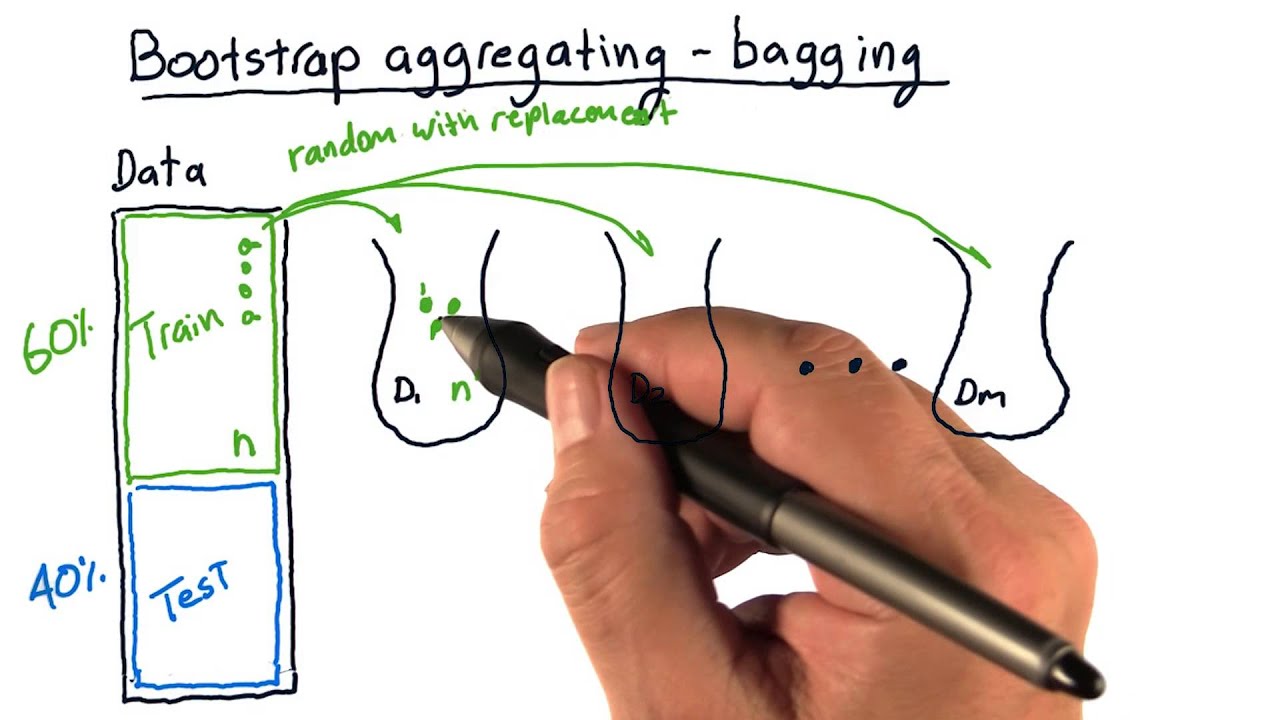

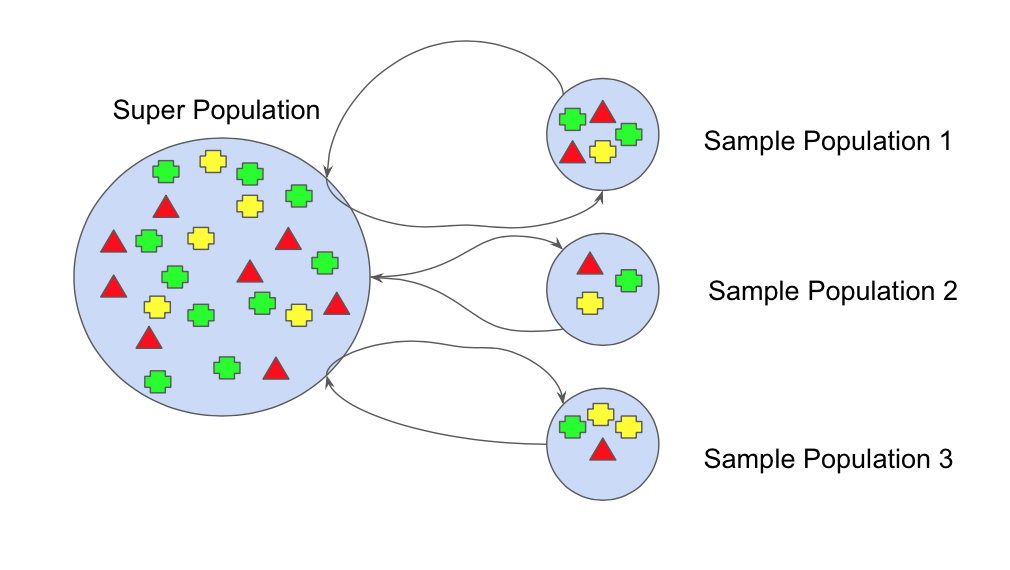

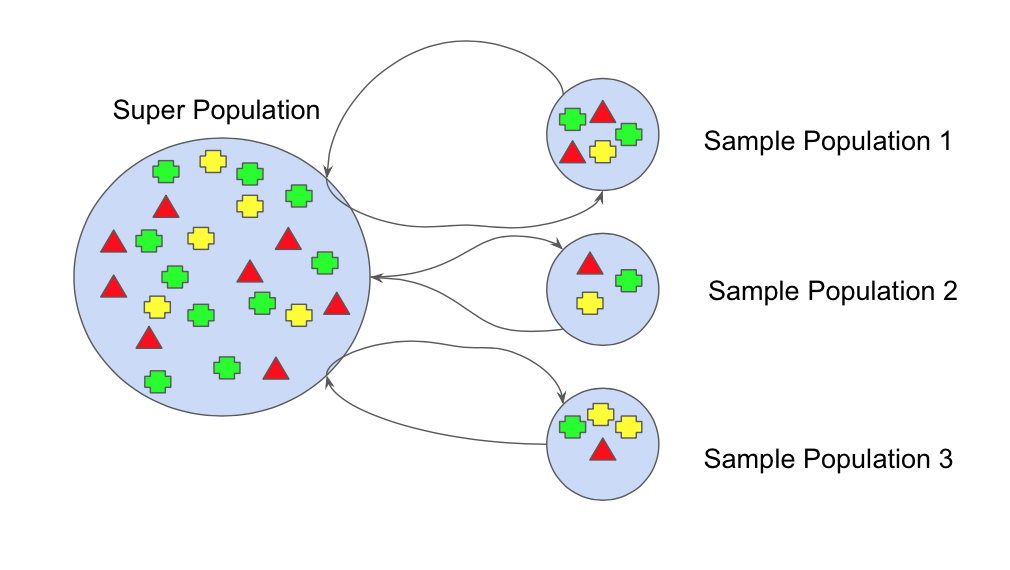

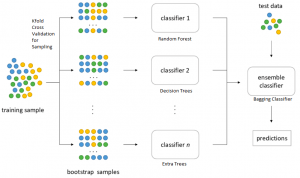

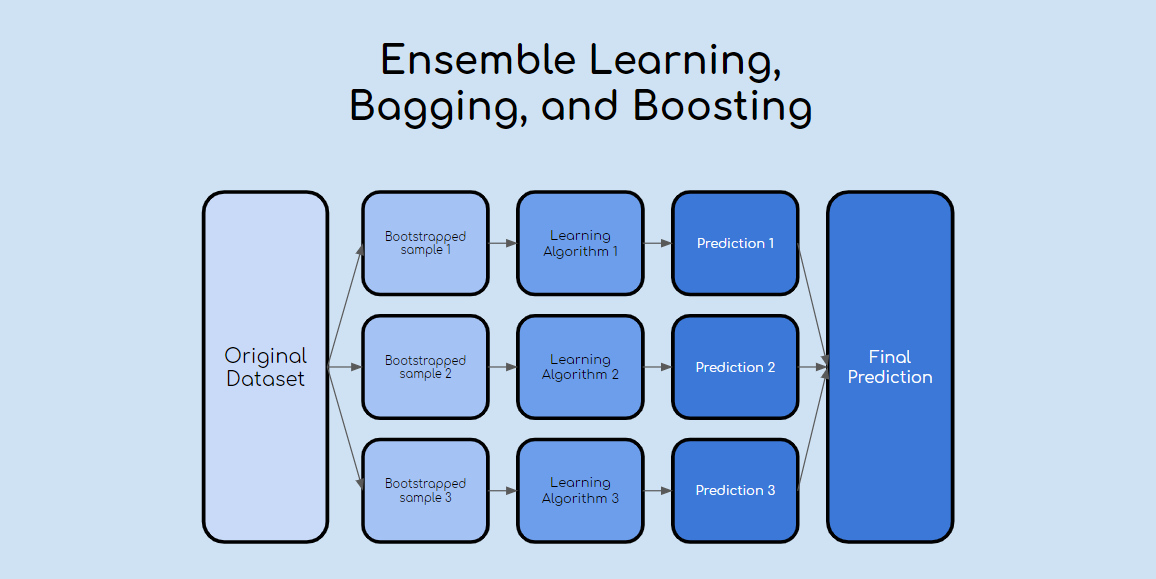

Recall that a bootstrapped sample is a sample of the original dataset drawn with replacement, so some observations can appear more than once and others not at all.
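A minimal sketch of drawing one bootstrapped sample, using only the Python standard library. The helper name `bootstrap_sample` and the toy dataset of ten integers are illustrative assumptions, not something taken from the text.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

rng = random.Random(0)          # fixed seed so repeated runs give the same sample
original = list(range(10))      # hypothetical toy dataset of 10 observations
sample = bootstrap_sample(original, rng)

print(sample)                                # same length as the original
print(sorted(set(original) - set(sample)))   # observations this sample left out
```

Because the draw is with replacement, the sample has the same size as the original dataset but usually contains repeats and omits some observations.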

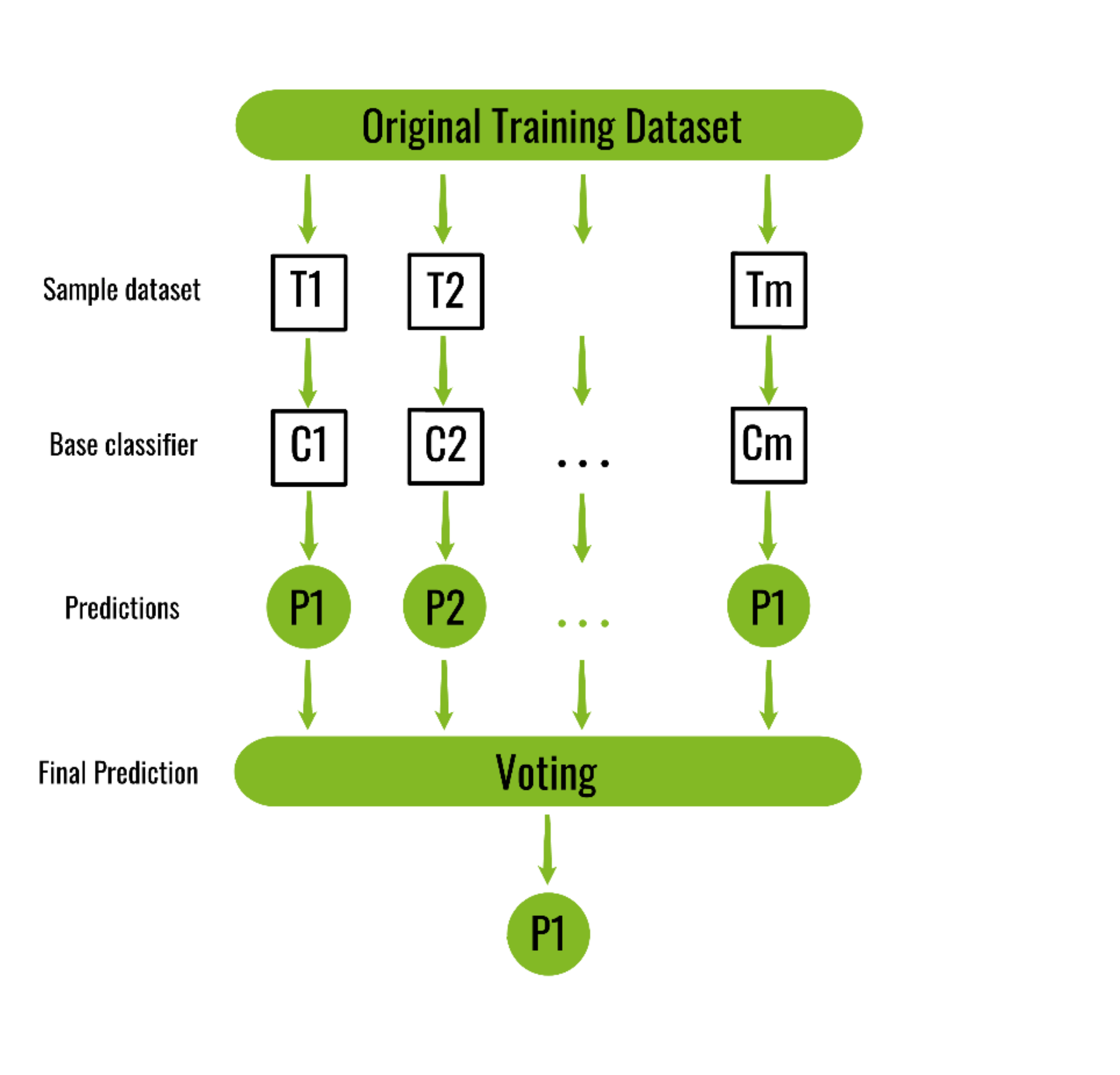

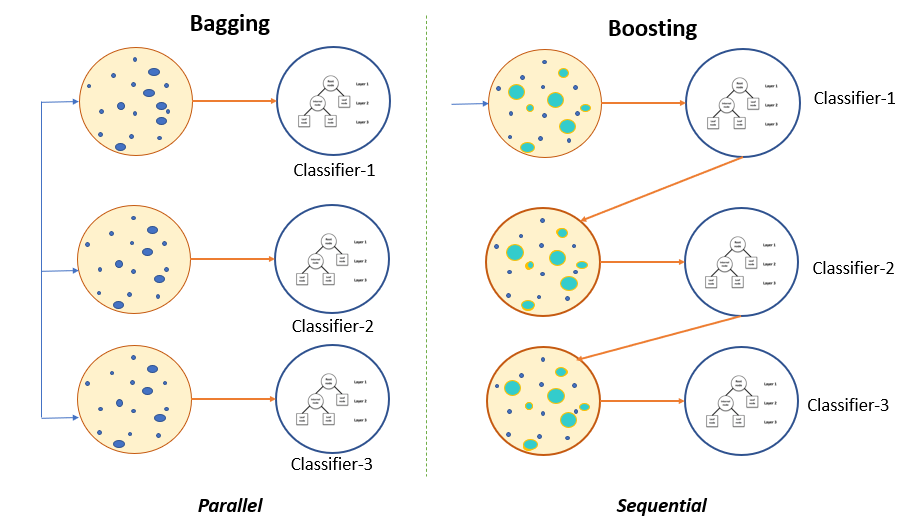

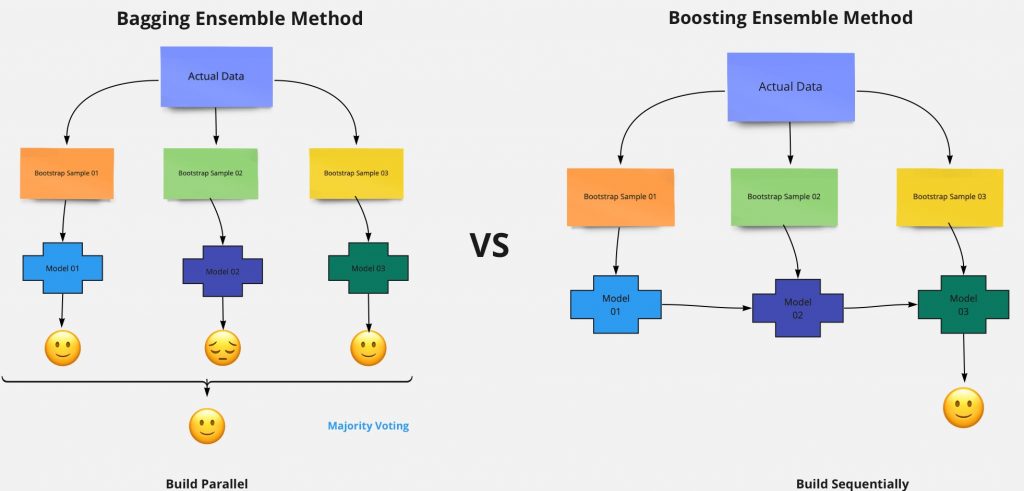

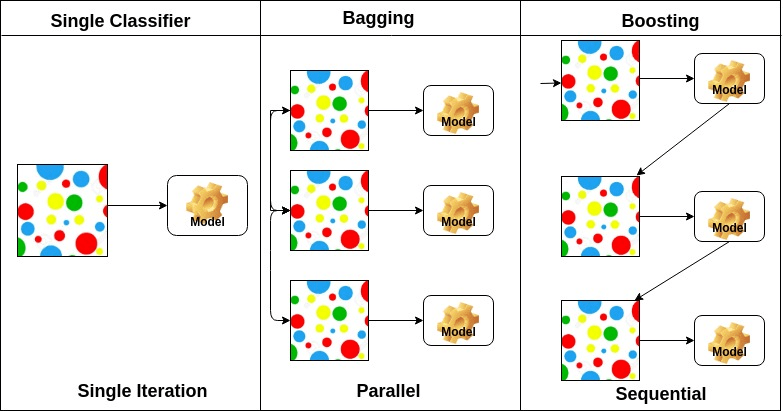

Bagging is a parallel ensemble learning method, whereas boosting is a sequential ensemble learning method.

Bagging uses the following method: take b bootstrapped samples from the original dataset, fit one model to each sample, and aggregate the predictions of the b models.
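The procedure can be sketched from scratch as follows. This is an illustration under stated assumptions, not a reference implementation: the data is a hypothetical noisy sine curve, and a 1-nearest-neighbour rule stands in for a fully grown decision tree as the high-variance base learner. The function names are made up for this example.

```python
import math
import random
from statistics import mean

def bootstrap_sample(rows, rng):
    """Sample len(rows) rows with replacement."""
    return [rng.choice(rows) for _ in rows]

def fit_1nn(rows):
    """High-variance base learner: predict the y of the nearest training x."""
    def predict(x):
        return min(rows, key=lambda row: abs(row[0] - x))[1]
    return predict

def fit_bagged(rows, b, rng):
    """Fit b base learners, one per bootstrapped sample, and average their predictions."""
    models = [fit_1nn(bootstrap_sample(rows, rng)) for _ in range(b)]
    def predict(x):
        return mean(model(x) for model in models)
    return predict

rng = random.Random(42)
# Hypothetical toy data: y = sin(x) plus Gaussian noise.
xs = [rng.uniform(0, 6) for _ in range(200)]
train = [(x, math.sin(x) + rng.gauss(0, 0.3)) for x in xs]

single = fit_1nn(train)
bagged = fit_bagged(train, b=50, rng=rng)

# Compare both predictors against the noise-free curve on an evenly spaced grid.
grid = [i * 0.1 for i in range(61)]
mse_single = mean((single(x) - math.sin(x)) ** 2 for x in grid)
mse_bagged = mean((bagged(x) - math.sin(x)) ** 2 for x in grid)
print(f"single model MSE: {mse_single:.4f}")
print(f"bagged model MSE: {mse_bagged:.4f}")
```

Each base learner is fitted independently of the others, which is what makes the method parallel: the b fits could run at the same time.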

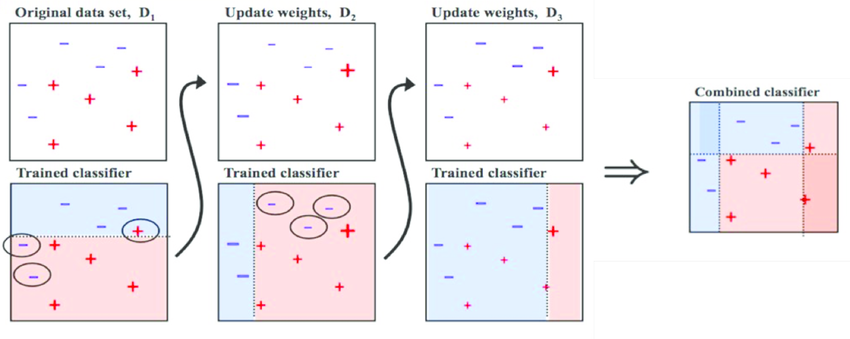

Boosting is an ensemble learning technique that, like bagging, uses a set of base learners to improve the stability and effectiveness of an ML model.

Bagging is a powerful method to improve the performance of simple models. It is typically used when you want to reduce the variance while retaining the bias.
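One way to see why averaging lowers variance while leaving bias unchanged is sketched below. It assumes, beyond what the text states, that the b models' predictions at a point x are identically distributed with variance σ² and pairwise correlation ρ. These symbols are introduced here for illustration.

```latex
\bar{f}(x) = \frac{1}{b} \sum_{i=1}^{b} \hat{f}_i(x)
\qquad
\mathbb{E}\big[\bar{f}(x)\big] = \mathbb{E}\big[\hat{f}_1(x)\big]
\qquad
\operatorname{Var}\big(\bar{f}(x)\big) = \rho\,\sigma^2 + \frac{1-\rho}{b}\,\sigma^2
```

The expectation, and therefore the bias, matches that of a single model, while the second variance term shrinks as b grows. The first term, ρσ², does not shrink, so strongly correlated models gain less from averaging.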

Ensemble methods improve model precision by using a group of models.

The bagging technique can be an effective approach to reduce the variance of a model and to prevent overfitting. Ensemble machine learning can be mainly categorized into bagging and boosting. Bagging, a parallel ensemble method whose name stands for Bootstrap Aggregating, is a way to decrease the variance of the prediction model by generating bootstrapped training sets.

Bagging aims to improve accuracy and performance. Both bagging and boosting use random sampling to generate multiple training sets.

Variance is reduced when you average the predictions made in different regions of the input space. Let's assume we have a sample dataset of 1000 observations.
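For a dataset of 1000 observations, a short sketch shows how much of the original data one bootstrapped sample is expected to cover. The seed and variable names are arbitrary choices for this illustration. The analytic value follows from each observation being missed by a single draw with probability 1 - 1/n.

```python
import random

n = 1000                                        # dataset size assumed in the text
rng = random.Random(1)
sample = [rng.randrange(n) for _ in range(n)]   # row indices drawn with replacement

in_bag = len(set(sample)) / n                   # fraction of distinct rows in this sample
expected_in_bag = 1 - (1 - 1 / n) ** n          # analytic expectation, close to 1 - 1/e

print(f"distinct rows in this sample: {in_bag:.3f}")
print(f"expected fraction:            {expected_in_bag:.3f}")   # 0.632
```

Roughly a third of the rows are left out of any given sample, which is why the models in the ensemble see noticeably different training sets.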

Bagging, also known as bootstrap aggregation, is the ensemble learning method that is commonly used to reduce variance within a noisy dataset. In bagging, a random sample of the training data is selected with replacement. The bagging technique is useful for both regression and statistical classification.
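The aggregation step differs between the two problem types. A common convention, assumed here rather than stated in the text, is to average numeric predictions for regression and to take a majority vote for classification. The function names and sample inputs are hypothetical.

```python
from collections import Counter
from statistics import mean

def aggregate_regression(predictions):
    """Combine numeric predictions from the bagged models by averaging."""
    return mean(predictions)

def aggregate_classification(predictions):
    """Combine class labels from the bagged models by majority vote."""
    return Counter(predictions).most_common(1)[0][0]

print(aggregate_regression([2.0, 3.0, 4.0]))               # 3.0
print(aggregate_classification(["spam", "ham", "spam"]))   # spam
```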

Bootstrap aggregation (bagging) is an ensembling method that attempts to resolve overfitting for classification or regression problems.

Decision trees have a lot of similarity and correlation in their predictions.
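Correlation between the individual models matters because it limits how much averaging can help. The simulation below is a sketch under simplifying assumptions: each "prediction" is a unit-variance Gaussian value, and the pairwise correlation `rho` is imposed through a shared component. For such values the variance of the average of b of them is rho + (1 - rho) / b.

```python
import random
from statistics import mean, pvariance

def variance_of_average(b, rho, trials, rng):
    """Empirical variance of the mean of b unit-variance values with pairwise correlation rho."""
    averages = []
    for _ in range(trials):
        shared = rng.gauss(0, 1)   # component common to all b values in this trial
        values = [
            (rho ** 0.5) * shared + ((1 - rho) ** 0.5) * rng.gauss(0, 1)
            for _ in range(b)
        ]
        averages.append(mean(values))
    return pvariance(averages)

rng = random.Random(7)
low = variance_of_average(b=50, rho=0.1, trials=2000, rng=rng)
high = variance_of_average(b=50, rho=0.8, trials=2000, rng=rng)
print(f"variance of the average, rho=0.1: {low:.3f}")
print(f"variance of the average, rho=0.8: {high:.3f}")
```

With weakly correlated values the average is far less variable than any single value. With strongly correlated values most of the variance survives the averaging.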

As we said already, bagging is a method of merging the same type of predictions. Ensemble learning is a machine learning paradigm where multiple models, often called weak learners, are trained to solve the same problem and combined to get better results. Bagging is a powerful ensemble method that helps to reduce variance and, by extension, prevent overfitting.

Bagging uses subsets (bags) of the original dataset.
